PyTorch Recipes

Recipes are bite-sized, actionable examples of how to use specific PyTorch features, different from our full-length tutorials.

Learn how to use PyTorch packages to prepare and load common datasets for your model.

Learn how to use PyTorch's torch.nn package to create and define a neural network for the MNIST dataset.

Learn how state_dict objects and Python dictionaries are used in saving or loading models from PyTorch.

Learn about the two approaches for saving and loading models for inference in PyTorch: via the state_dict and via the entire model.
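As a rough illustration of the state_dict approach, the sketch below saves a model's parameters and restores them into a fresh instance. The tiny `nn.Linear` model and the in-memory buffer are assumptions made to keep the example self-contained; a real recipe would typically write to a file path.

```python
import io

import torch
import torch.nn as nn

# A tiny stand-in model (an assumption for illustration); any nn.Module works the same way.
model = nn.Linear(4, 2)

# Save only the parameters (the state_dict) to an in-memory buffer.
# A file path such as "model.pt" could be passed instead of the buffer.
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)

# To load, build the same architecture first, then restore the parameters.
buffer.seek(0)
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(buffer))
restored.eval()  # switch to evaluation mode before running inference
```

Saving the entire model with `torch.save(model, ...)` is the alternative approach, but it ties the saved file to the exact class definition and directory layout used at save time.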

Saving and loading a general checkpoint model for inference or resuming training can be helpful for picking up where you last left off. In this recipe, explore how to save and load multiple checkpoints.
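A minimal sketch of a general checkpoint, assuming a toy model and an SGD optimizer: besides the model parameters, the checkpoint carries the optimizer state and bookkeeping values needed to resume. The dictionary keys (`epoch`, `model_state_dict`, and so on) are a naming choice for this example, not something PyTorch requires.

```python
import io

import torch
import torch.nn as nn

model = nn.Linear(4, 2)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)

# Run one training step so the optimizer has internal state (momentum buffers).
loss = model(torch.randn(8, 4)).sum()
loss.backward()
optimizer.step()

# Bundle everything needed to resume into one dictionary.
checkpoint = {
    "epoch": 3,  # hypothetical epoch counter
    "model_state_dict": model.state_dict(),
    "optimizer_state_dict": optimizer.state_dict(),
    "loss": loss.item(),
}
buffer = io.BytesIO()  # a file path would normally be used here
torch.save(checkpoint, buffer)

# Resume: rebuild the model and optimizer, then restore their states.
buffer.seek(0)
loaded = torch.load(buffer)
resumed_model = nn.Linear(4, 2)
resumed_optimizer = torch.optim.SGD(resumed_model.parameters(), lr=0.1, momentum=0.9)
resumed_model.load_state_dict(loaded["model_state_dict"])
resumed_optimizer.load_state_dict(loaded["optimizer_state_dict"])
start_epoch = loaded["epoch"] + 1
resumed_model.train()  # use .eval() instead when loading for inference
```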

In this recipe, learn how saving and loading multiple models can be helpful for reusing models that you have previously trained.

Learn how warmstarting the training process by partially loading a model or loading a partial model can help your model converge much faster than training from scratch.
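One way to sketch partial loading is `load_state_dict` with `strict=False`, which copies the parameters whose names match and reports the rest. The two model classes below are hypothetical, invented only to show a shared layer next to a replaced head.

```python
import torch
import torch.nn as nn


class ModelA(nn.Module):
    """Hypothetical pretrained model: shared feature layer plus a 2-class head."""

    def __init__(self):
        super().__init__()
        self.features = nn.Linear(4, 8)
        self.head = nn.Linear(8, 2)


class ModelB(nn.Module):
    """Hypothetical new model: same feature layer, different 3-class classifier."""

    def __init__(self):
        super().__init__()
        self.features = nn.Linear(4, 8)
        self.classifier = nn.Linear(8, 3)


model_a = ModelA()
model_b = ModelB()

# strict=False skips keys that do not match by name instead of raising an error.
result = model_b.load_state_dict(model_a.state_dict(), strict=False)

# The shared layer now holds the pretrained weights.
print(torch.equal(model_b.features.weight, model_a.features.weight))
print("missing:", result.missing_keys)        # parameters model_b has but the dict lacks
print("unexpected:", result.unexpected_keys)  # parameters in the dict that model_b lacks
```

Note that `strict=False` only tolerates mismatched names. A parameter with a matching name but a different shape still raises an error, so such entries would need to be filtered out of the dictionary first.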

Learn how saving and loading models across devices (CPUs and GPUs) is relatively straightforward using PyTorch.

Learn when you should zero out gradients and how doing so can help increase the accuracy of your model.
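The reason zeroing matters is that PyTorch accumulates gradients across `backward()` calls by default. The sketch below uses a bare tensor rather than a full model (an assumption to keep it short) to show the accumulation and the reset.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

# First backward pass: the gradient of sum(w * w) is 2 * w.
(w * w).sum().backward()
first = w.grad.clone()

# Second backward pass without zeroing: the new gradient is added to the old one.
(w * w).sum().backward()
accumulated = w.grad.clone()

# Zero the gradient, then run backward again to get a fresh value.
w.grad.zero_()
(w * w).sum().backward()
fresh = w.grad.clone()

print(first, accumulated, fresh)
```

In a training loop the same reset is usually done with `optimizer.zero_grad()` before each `loss.backward()` call.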

Learn how to use PyTorch's benchmark module to measure and compare the performance of your code.
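A minimal sketch of timing two equivalent computations with `torch.utils.benchmark.Timer`. The tensor size, the two statements, and the run count are arbitrary choices for illustration, and the measured times will vary by machine.

```python
import torch
import torch.utils.benchmark as benchmark

x = torch.randn(64, 64)

# Two ways to compute the sum of squares of x.
timer_mul = benchmark.Timer(
    stmt="(x * x).sum()",
    globals={"x": x, "torch": torch},
)
timer_dot = benchmark.Timer(
    stmt="torch.dot(x.flatten(), x.flatten())",
    globals={"x": x, "torch": torch},
)

# Run each statement 100 times and collect a Measurement object.
measurement_mul = timer_mul.timeit(100)
measurement_dot = timer_dot.timeit(100)

print(measurement_mul)
print(measurement_dot)
```

Each `Measurement` exposes summary statistics such as `mean` and `median` (in seconds), which makes side-by-side comparison straightforward.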

Learn how to measure snippet run times and collect instructions.

Learn how to use PyTorch's profiler to measure the time and memory consumption of operators.

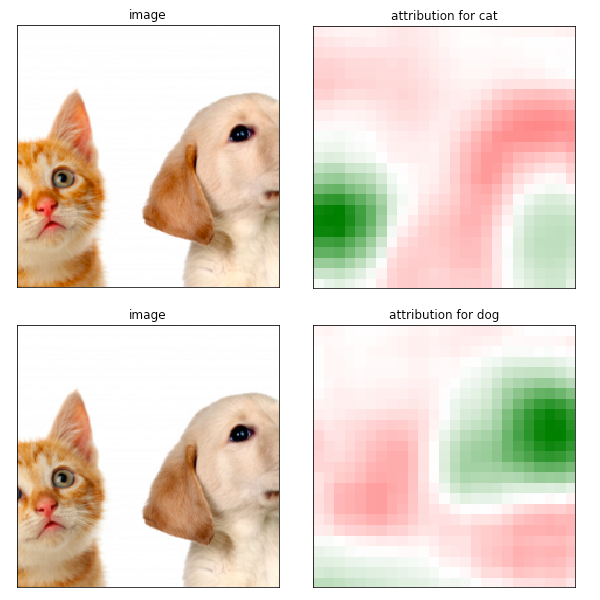

Learn how to use Captum to attribute the predictions of an image classifier to their corresponding image features and visualize the attribution results.

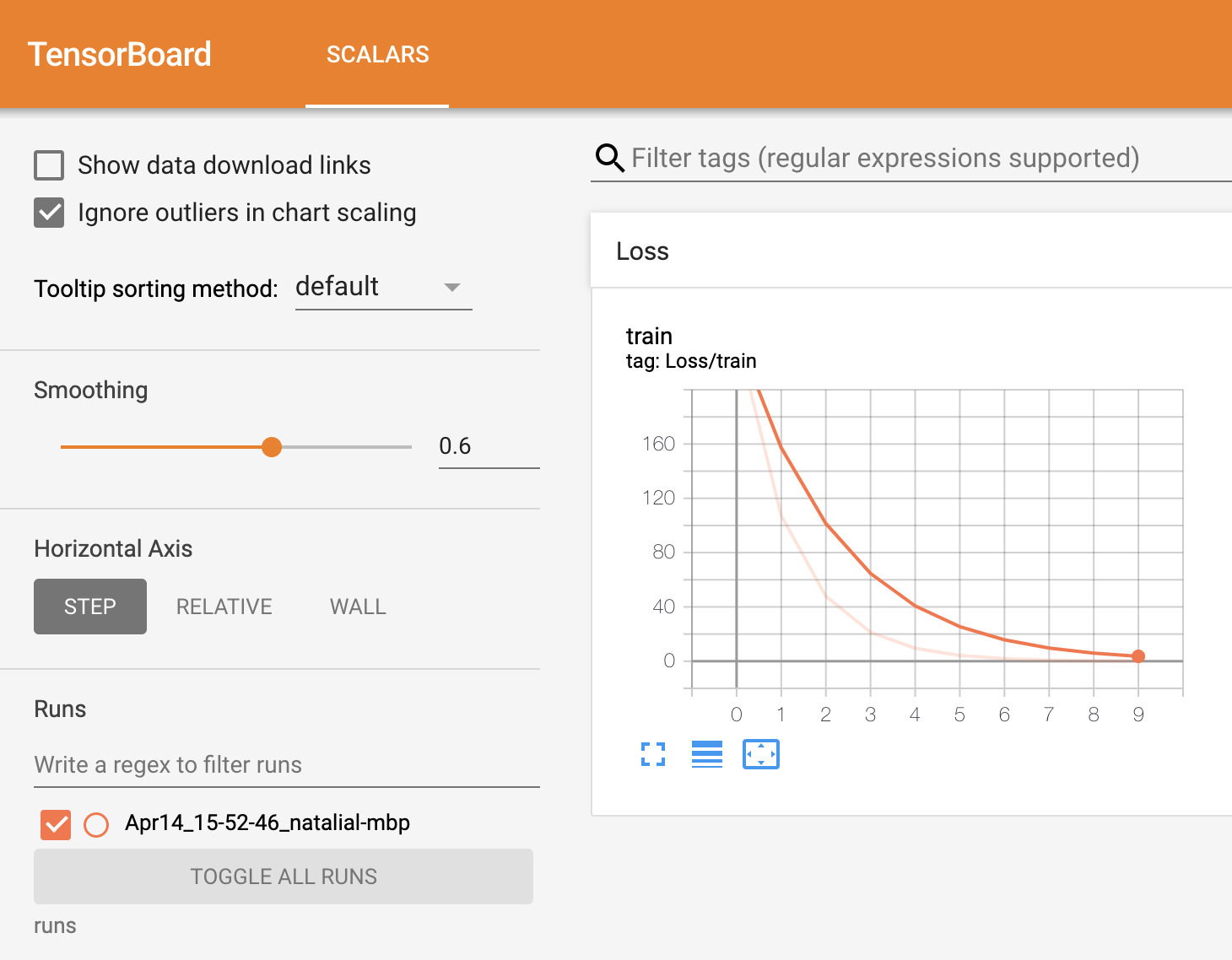

Learn the basic usage of TensorBoard with PyTorch and how to visualize data in the TensorBoard UI.

Apply dynamic quantization to a simple LSTM model.

Learn how to export your trained model in TorchScript format, load the TorchScript model in C++, and run inference.

Learn how to use Flask, a lightweight web framework, to quickly set up a web API for your trained PyTorch model.

A list of recipes for optimizing PyTorch performance on mobile (Android and iOS).

Learn how to build an Android application from scratch that uses the LibTorch C++ API and a TorchScript model with a custom C++ operator.

Learn how to fuse a list of PyTorch modules into a single module to reduce the model size before quantization.

Learn how to reduce the model size and make it run faster without losing much accuracy.

Learn how to convert the model to TorchScript and (optionally) optimize it for mobile apps.

Learn how to add the model to an iOS project and use the PyTorch pod for iOS.

Learn how to add the model to an Android project and use the PyTorch library for Android.

How to use the PyTorch profiler to profile RPC-based workloads.

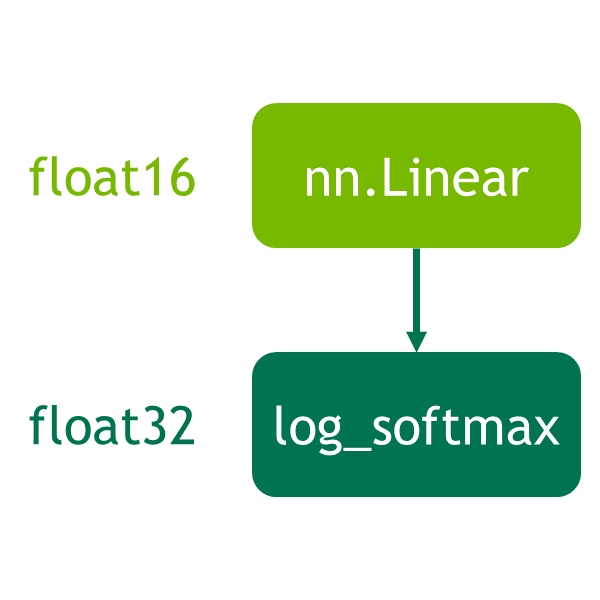

Use torch.cuda.amp to reduce runtime and save memory on NVIDIA GPUs.

Tips for achieving optimal performance.

How to use ZeroRedundancyOptimizer to reduce memory consumption.

How to use RPC with direct GPU-to-GPU communication.